How should you deal with non-human traffic?

Our research shows that 30–60% of a typical website’s traffic isn’t human. Visits by AI crawlers, bots, and automated agents have increased significantly. They don’t buy products or improve engagement, but they trigger the same processes as real customers: API calls and server requests, cloud function executions, data pipeline ingestion, and event logging in Martech and AdTech systems.

Every one of those actions carries a cost. For brands, that means paying for capacity that doesn’t always drive value. Bots gain nothing from personalization, yet serving it to them still means loading extra scripts (e.g., recommendation engines, tracking pixels) and fetching user-specific data.

Why It Matters Now

Traditional bot traffic has been relatively easy to filter and understand, because search engine crawlers and known bots often declare themselves. Today’s AI-driven crawlers are different. They mimic human behaviour, load full pages, and even trigger events. That makes them harder to detect, yet identifying them still matters.

This traffic has two consequences worth considering:

- IT Budgets Inflate – Servers, APIs, and cloud services scale to support traffic that’s not real.

- Analytics Lose Accuracy – Bots skew KPIs, engagement metrics, and conversion ratios.
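To make both effects concrete, here is a minimal sketch with purely illustrative numbers. The figures, the `TrafficSample` shape, and the `summarizeTraffic` helper are all hypothetical, and the sketch assumes that orders come only from humans and that infrastructure cost scales linearly with traffic share, which real billing rarely does exactly.

```typescript
// Illustrative only: none of these figures are measurements.
interface TrafficSample {
  totalSessions: number;    // all sessions recorded by analytics
  nonHumanShare: number;    // fraction of sessions that are non-human (0..1)
  orders: number;           // orders placed (assumed to come from humans only)
  monthlyInfraCost: number; // spend assumed to scale linearly with traffic
}

function summarizeTraffic(sample: TrafficSample) {
  const humanSessions = sample.totalSessions * (1 - sample.nonHumanShare);
  return {
    // What the dashboard shows when bots are counted as visitors.
    reportedConversion: sample.orders / sample.totalSessions,
    // What the conversion rate looks like for humans alone.
    humanConversion: sample.orders / humanSessions,
    // Rough share of spend serving non-human traffic.
    costAttributedToNonHuman: sample.monthlyInfraCost * sample.nonHumanShare,
  };
}

// Hypothetical site: 100,000 sessions, 40% non-human, 1,500 orders.
const trafficSummary = summarizeTraffic({
  totalSessions: 100000,
  nonHumanShare: 0.4,
  orders: 1500,
  monthlyInfraCost: 10000,
});

console.log(trafficSummary);
```

With these assumed inputs, the reported conversion rate is about 1.5%, while the rate among humans alone is about 2.5%, and roughly 40% of the traffic-driven spend serves visitors who will never buy.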

The Gravito Solution

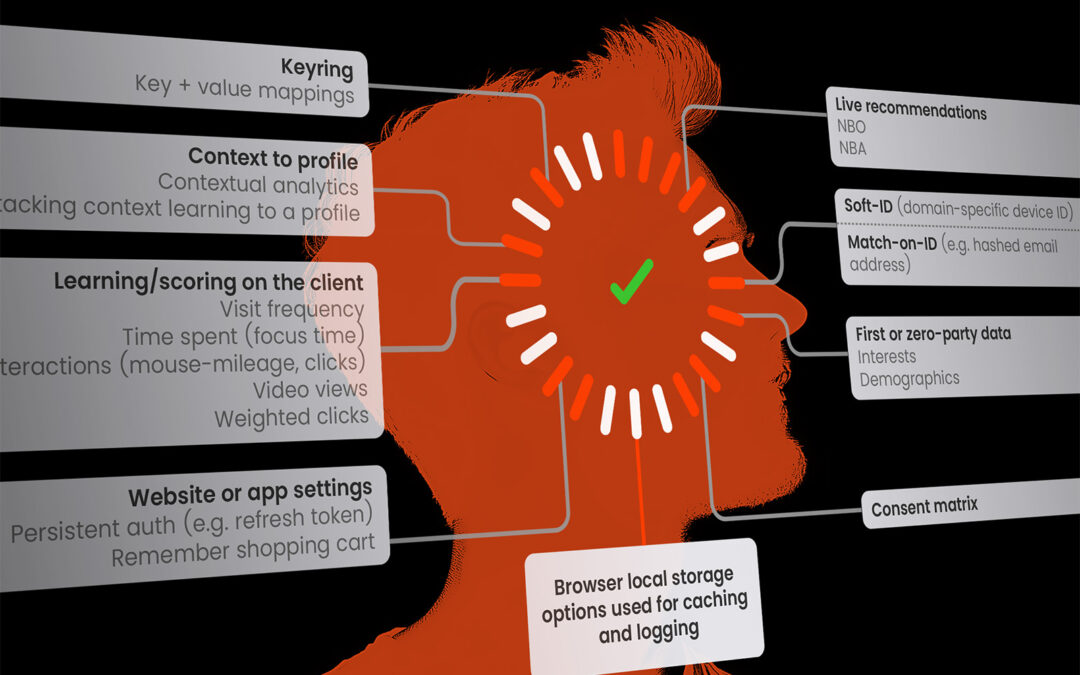

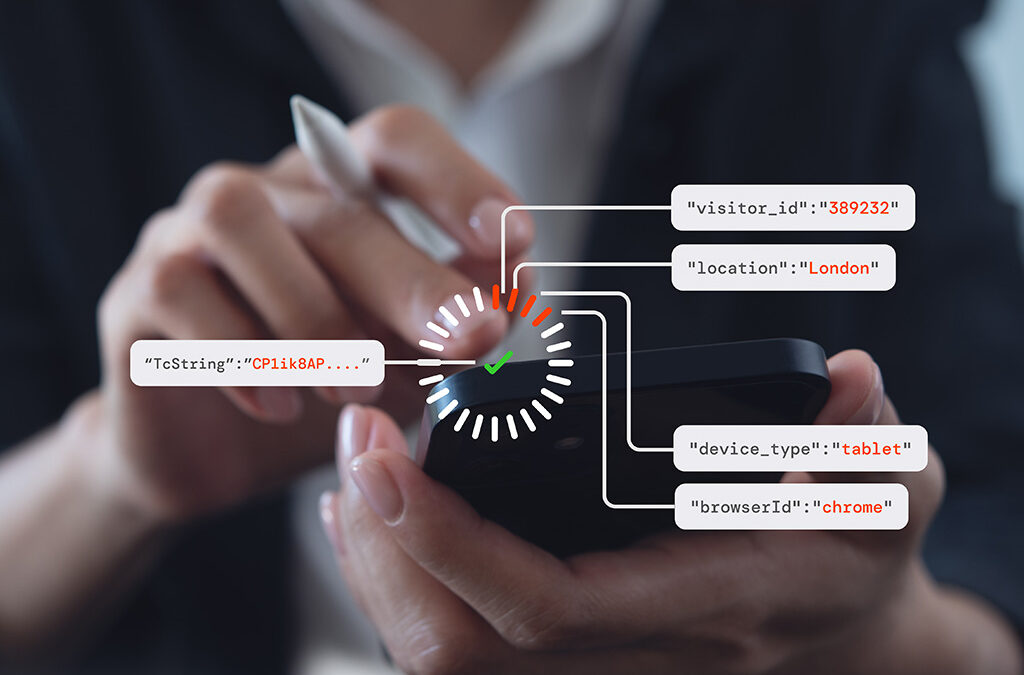

This is where Gravito human detection changes the game. Instead of traditional bot detection, the solution detects humans during page load. This lets you decide which backend calls and technologies are applied only to humans. It’s important to understand that many AI crawlers and bots are beneficial, and you want them to visit your site. You still need Googlebot, Bingbot, and others to index and rank your content, with full access to public content, the navigation structure, and the sitemap, without any cloaking.

At the same time, personal and dynamic experiences should be served only to humans. This includes real-time personalization (recommendations, dynamic odds, product bundles), recently viewed or “For you” content, and loyalty or VIP features. These rely on session data, behavioural analytics, and cookies, none of which should be wasted on or polluted by bots.
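The split between public content for everyone and personalized features for humans can be sketched roughly as follows. This is not Gravito's API: the `VisitorKind` type, the `PageTask` shape, and the `selectTasks` helper are hypothetical names chosen for illustration, and how a real detection result is exposed to the page is an assumption here.

```typescript
// Hypothetical classification result; a real detector may expose something different.
type VisitorKind = "human" | "declared-bot" | "unknown";

interface PageTask {
  name: string;
  humansOnly: boolean; // true for personalization, tracking, and similar work
  run: () => string;   // returns a description instead of touching a real backend
}

// Public tasks run for every visitor, so crawlers see the same public content
// as humans. Tasks marked humansOnly run only when a human was detected.
function selectTasks(kind: VisitorKind, tasks: PageTask[]): PageTask[] {
  return tasks.filter((task) => !task.humansOnly || kind === "human");
}

const pageTasks: PageTask[] = [
  { name: "renderPublicContent", humansOnly: false, run: () => "public content rendered" },
  { name: "loadRecommendations", humansOnly: true, run: () => "recommendations fetched" },
  { name: "fireTrackingPixel", humansOnly: true, run: () => "tracking pixel fired" },
];

for (const kind of ["human", "declared-bot", "unknown"] as VisitorKind[]) {
  const names = selectTasks(kind, pageTasks).map((task) => task.name);
  console.log(`${kind}: ${names.join(", ")}`);
}
```

In this sketch, visitors not confirmed as human still receive the full public page, which keeps indexing intact, while the costly per-user calls are skipped. Treating "unknown" visitors like bots is a design choice of the sketch, not a recommendation from the source.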

With Gravito, you can direct your marketing, advertising, and customer care engagements only to real humans, and stop wasting resources on machines when it doesn’t make sense. This reduces spending on cloud, technology, and infrastructure while improving system efficiency and reliability. It also strengthens the credibility of data-driven decisions and supports sustainability goals by cutting unnecessary resource consumption.

It’s a rare opportunity: cost savings, operational efficiency, and sustainability improvements—all from the same action.

What next?

In the age of AI crawlers, ignoring non-human traffic is no longer an option. For DTC eCommerce brands, the question is not whether bots are visiting—it’s how much they’re costing you.

If you want to understand how to solve this problem and what steps to take, book a session with our team to hear practical strategies for getting the most out of both AI and real human traffic.